Ramp uses Modal to fine-tune their LLMs and scale batch processing. With Modal, Ramp accelerated development of their text-to-structured-JSON model for receipt management, reducing the number of receipts requiring manual intervention by 34%.

About Ramp

Ramp is rebuilding the CFO suite. It combines corporate cards and expense management, vendor management and price intelligence, procurement, bill payments, and accounting integrations into a unified platform designed to save time and money with every click. Businesses use Ramp as their primary spend management solution to fully automate non-payroll spend and streamline their financial operations. Time-consuming tasks for finance teams, such as uploading receipts, paying vendors, and tracking spend, are managed seamlessly in Ramp’s user-friendly interface.

The problem: Fine-tuning with custom code is a pain

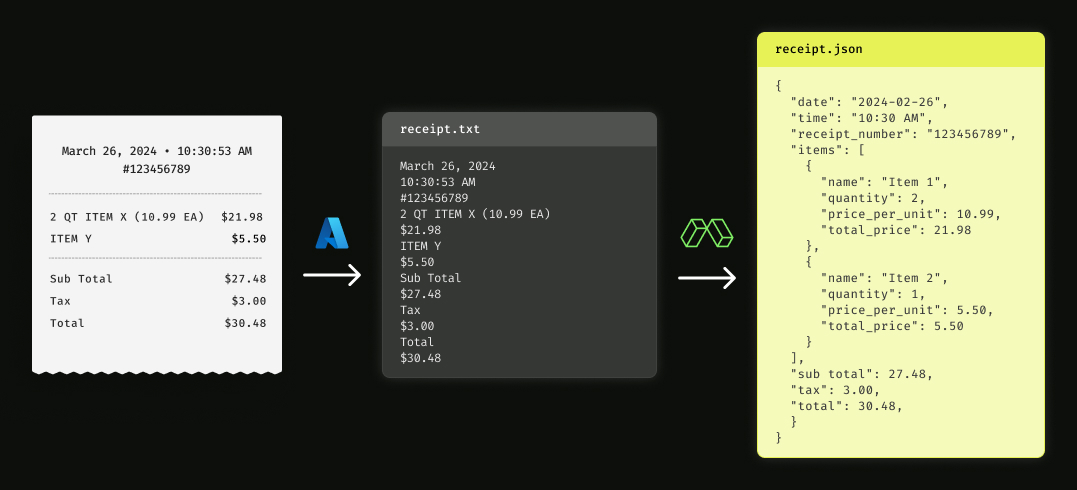

One of Ramp’s flagship products is its intelligent receipt submission flow, which uses an LLM to transform OCR output into structured JSON.
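One way to picture the output side of this flow: the LLM emits JSON, and something downstream has to decide whether that JSON is trustworthy or needs a human. The sketch below is a minimal illustration under assumptions, not Ramp’s code. It assumes a hypothetical four-field receipt schema (`merchant`, `total`, `currency`, `purchase_date`); `Receipt` and `parse_receipt` are illustrative names.

```python
import json
from dataclasses import dataclass
from datetime import date
from decimal import Decimal, InvalidOperation
from typing import Optional


# Hypothetical receipt schema, for illustration only.
@dataclass
class Receipt:
    merchant: str
    total: Decimal
    currency: str  # three-letter code, e.g. "USD"
    purchase_date: date


REQUIRED_FIELDS = ("merchant", "total", "currency", "purchase_date")


def parse_receipt(model_output: str) -> Optional[Receipt]:
    """Validate raw LLM output. Returns None when the receipt should go to manual review."""
    try:
        data = json.loads(model_output)
    except json.JSONDecodeError:
        return None  # the model did not emit valid JSON
    if not isinstance(data, dict) or any(f not in data for f in REQUIRED_FIELDS):
        return None  # wrong shape or missing fields

    merchant, currency = data["merchant"], data["currency"]
    if not isinstance(merchant, str) or not merchant.strip():
        return None
    if not isinstance(currency, str) or len(currency) != 3 or not currency.isalpha():
        return None
    try:
        # str() first so a float like 4.5 becomes Decimal("4.5"), not a binary approximation
        total = Decimal(str(data["total"]))
        purchase_date = date.fromisoformat(data["purchase_date"])
    except (InvalidOperation, TypeError, ValueError):
        return None  # unparseable amount or date
    if not total.is_finite() or total < 0:
        return None  # NaN, infinity, or negative totals are rejected

    return Receipt(merchant.strip(), total, currency.upper(), purchase_date)
```

Returning `None` instead of raising keeps the failure path explicit: in this sketch, each `None` stands for a receipt that would need manual intervention, which is the quantity Ramp reports reducing.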

The Ramp team initially tried LLM providers like OpenAI, but could not get the customizability they wanted and were also concerned about cost, reliability, and security. They then considered a fine-tuning API provider for open-source models, but quickly realized that this black-box approach gave them too little control.

Ramp realized they needed a platform that would grant them the flexibility to control each step of their fine-tuning workflow.

The solution: Improve model accuracy while saving cost

By adopting Modal, Ramp quickly and confidently reduced the number of receipts requiring manual intervention by 34%, on infrastructure estimated to be 79% cheaper than major LLM providers such as OpenAI.

As a general-purpose platform for running Python functions in the cloud, Modal gave Ramp the flexibility to build a custom experimentation framework. They set up Modal functions to:

- Train many candidate models in parallel

- Persist the weights from different fine-tuning runs into Modal volumes

- Serve an inference endpoint that could spin up the different models as needed based on a parameterized input

Together, these functions let the team quickly compare performance across multiple model designs.
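The three functions listed above follow a common experimentation pattern: fan out training runs, persist each run’s weights under its own name, and let the inference path pick a model by name at request time. The sketch below reproduces only the shape of that pattern in plain Python so it runs anywhere. It assumes a thread pool in place of Modal’s parallel GPU containers and a temporary directory in place of a Modal Volume; `train_candidate`, `load_model`, `infer`, and the `lr`/`rank` hyperparameters are hypothetical, and no real training happens. On Modal itself, the fan-out would typically go through a function’s `.map()`, with weights written to a Volume mounted into each container.

```python
import json
import tempfile
from concurrent.futures import ThreadPoolExecutor
from functools import lru_cache
from pathlib import Path

# Local stand-in for a persistent Modal Volume mount (assumption for illustration).
WEIGHTS_DIR = Path(tempfile.mkdtemp())


def train_candidate(config: dict) -> str:
    """Hypothetical fine-tuning run. Real training is replaced by a placeholder
    payload; what matters is that each run persists under its own name."""
    run_name = f"lr{config['lr']}-rank{config['rank']}"
    weights = {"config": config}  # placeholder for real model or adapter weights
    (WEIGHTS_DIR / f"{run_name}.json").write_text(json.dumps(weights))
    return run_name


@lru_cache(maxsize=8)
def load_model(run_name: str) -> dict:
    """Load persisted weights once per run name and keep them in memory."""
    return json.loads((WEIGHTS_DIR / f"{run_name}.json").read_text())


def infer(ocr_text: str, run_name: str) -> dict:
    """Parameterized inference: the caller chooses which candidate model to use."""
    model = load_model(run_name)
    # Placeholder for generation; reports which candidate handled the request.
    return {"model": run_name, "config": model["config"], "chars_in": len(ocr_text)}


if __name__ == "__main__":
    grid = [{"lr": lr, "rank": r} for lr in (1e-4, 3e-4) for r in (8, 16)]
    # Train every candidate concurrently (each would be its own GPU container on Modal).
    with ThreadPoolExecutor() as pool:
        run_names = list(pool.map(train_candidate, grid))
    for name in run_names:
        print(infer("BLUE BOTTLE 4.50 USD", name))
```

Because `load_model` is cached, switching between candidates pays the load cost only once per model per process, which is what makes a single endpoint that serves many candidates practical for side-by-side evaluation.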

Modal was able to support this workflow by:

- Easily orchestrating infrastructure: Modal automatically handles scaling GPUs up and down, which would traditionally be a huge pain with major cloud providers

- Standardizing experiment environments: Modal allows users to easily define a containerized environment that can be attached to any Modal function

Bonus: Speeding up LLM batch processing

Outside of fine-tuning, the Ramp team also opportunistically found other use cases for Modal.

For instance, one engineer was faced with the daunting task of using an LLM to strip PII from 25,000 invoices. A script that would have taken 3 days to run locally was ported to a Modal function and parallelized across 256 cloud workers, completing the task in just 20 minutes at a cost of $100.
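This kind of job is an embarrassingly parallel map: the same function applied independently to each invoice. The sketch below shows the fan-out with Python’s standard `concurrent.futures`. The regex-based `redact_pii` is a hypothetical stand-in for the LLM call the engineer actually used, and the pool’s threads stand in for Modal’s cloud workers; on Modal, the same map would typically go through a function’s `.map()`, with each call running in its own container.

```python
import re
from concurrent.futures import ThreadPoolExecutor

# Crude stand-in for an LLM-based redactor: catches emails and US-style phone numbers only.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact_pii(invoice_text: str) -> str:
    """Hypothetical per-invoice task; each call is independent of every other."""
    return PHONE.sub("[PHONE]", EMAIL.sub("[EMAIL]", invoice_text))


def redact_all(invoices: list[str], workers: int = 256) -> list[str]:
    """Apply redact_pii to every invoice concurrently; results keep input order."""
    # Threads suit I/O-bound work such as remote LLM calls; each thread
    # stands in for one of the cloud workers described above.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(redact_pii, invoices))


if __name__ == "__main__":
    sample = ["Bill to jane.doe@example.com, tel 415-555-0123", "Total due: 120.00"]
    print(redact_all(sample))
    # ['Bill to [EMAIL], tel [PHONE]', 'Total due: 120.00']
```

For scale, the figures in the paragraph above work out as follows: 3 days is 4,320 minutes, so finishing in 20 minutes is roughly a 216x speedup, not far from the ideal 256x for 256 workers. On average each worker handled about 98 of the 25,000 invoices, and $100 across 25,000 invoices comes to $0.004 per invoice.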

Companies are quickly recognizing that using ergonomic infrastructure for data-intensive applications can double developer productivity. With Modal’s serverless platform as a critical part of their data processing stack, Ramp is well-equipped to ship their AI features faster than ever before.